User experience (UX) research relies heavily on understanding the thoughts, motivations, and pain points of target users. While interviews and surveys are crucial tools, they often have inherent limitations. Interviews can be time-consuming to conduct and analyze, and surveys can lack the depth to reveal truly meaningful insights.

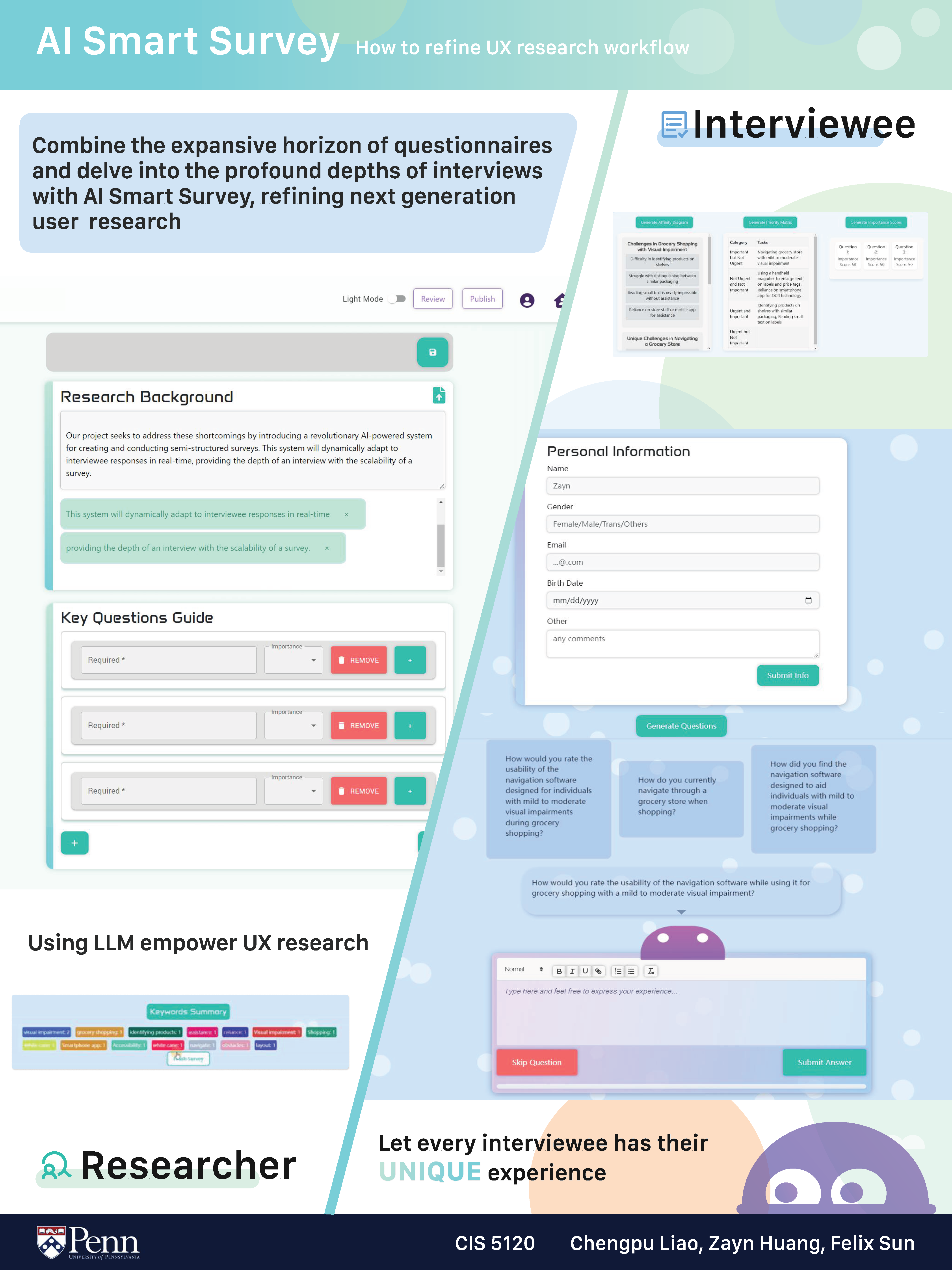

Our project seeks to address these shortcomings by introducing a revolutionary AI-powered system for creating and conducting semi-structured surveys. This system will dynamically adapt to interviewee responses in real-time, providing the depth of an interview with the scalability of a survey.

The inspiration for this project came from recognizing a few key issues with traditional UX research methods:

Static Questionnaires: Rigid surveys force users down a predetermined path, even if their responses indicate that a different line of questioning might be more fruitful.

Time-Intensive Analysis: Combing through interview transcripts and large quantities of survey data to uncover patterns is a manual and time-consuming process for researchers.

Missed Opportunities: Without the ability to adapt questioning in real-time, researchers may miss out on key insights that could provide a more complete picture of user needs.

We realized that an AI-driven solution could solve these problems. Consider these potential features:

Adaptive Questioning: The system could analyze responses and intelligently determine the most relevant follow-up questions, leading to more focused and insightful data collection.

Real-time Insights: As responses are collected, the AI could look for patterns and themes, providing researchers with valuable insights earlier in the process.

By combining these ideas, we aim to create a system that empowers UX researchers to collect richer, more actionable data, all while saving time and resources.

1. Research Goals

The primary research goal here is to understand the tools and methodologies that UX professionals rely on for user research, particularly focusing on the role and effectiveness of questionnaires in gathering user insights. The aim is to gain a comprehensive understanding of how different user research methods are selected and used based on specific project requirements. This involves exploring what tools and approaches are preferred, what factors influence their selection, and how questionnaires are used in various contexts. Additionally, the goal includes identifying the strengths and limitations of questionnaires, how frequently they're employed, and how they compare with other user research methods. The findings will help inform best practices in user research and provide insights into potential new tools and techniques that UX professionals could find useful.

2. Interview Guide

Introduction

Hi! Thank you so much for taking the time to speak to us. I’m Chengpu, a master's student at the University of Pennsylvania. Today, we’d love to ask you some questions about user research. All sensitive data will be destroyed.

Kick-off/ Warm-up

Can you tell me a little bit about your background and how you got into UX design/research?

What projects are you currently working on? Which project are you most proud of, and could you walk me through it?

Main Questions

What primary tools and methods do you rely on for user research in your projects? Can you walk me through how you typically use these tools in a project lifecycle?

How do you decide which user research methods or tools to use for a particular project? What factors influence your choice?

In your experience, how effective are questionnaires in gathering user insights compared to other user research methods?

Reflecting on your user research process, what aspects do you find most rewarding, and what aspects do you find most challenging?

Is there a tool or method in UX research you're excited about or would like to experiment with in the future?

How frequently do you use questionnaires in your user research, and in what contexts do they seem most appropriate or valuable?

From your experience, what are the strengths and limitations of using questionnaires for gathering user insights?

Which online questionnaire platforms do you use? What are the pros and cons of each?

Can you share an example where a questionnaire provided unexpected insights or failed to capture necessary information? How did you adapt?

Demographic

Can you please share your current job title and the type of organization you work for? (e.g., startup, large corporation, non-profit)

How many years of experience do you have in UX design or UX research?

What is your educational background? (Field of study and level of education)

Can you briefly describe the primary user base or audience you design/research for in your current role?

Recruiting Plan

Target Population: Our research targets UX Designers and UX Researchers currently engaged in the field, with varying levels of experience, and working in diverse organizational settings (startups, large corporations, non-profits). The primary aim is to understand their user research methodologies, tool preferences, and perspectives on questionnaires within their work processes.

Recruitment Strategy: We plan to recruit participants through our personal networks and use snowball sampling to reach additional participants.

Number of Participants: Our goal is to conduct interviews with 4 UX professionals (2 entry-level, 2 senior). Depending on the initial findings and diversity of insights, we may extend the interviews to more participants to ensure a broad representation of experiences and perspectives.

Recruitment Message:

Subject: Invitation to Contribute to UX Research Study at the University of Pennsylvania

Dear [Name],

I hope this message finds you well. My name is Chengpu, and I am a master's student at the University of Pennsylvania, currently engaged in a research project focused on understanding the tools and methodologies employed by UX professionals in their user research processes.

Your expertise and experience in UX design/research would offer invaluable insights into the current practices and perspectives within the field, especially regarding the use and effectiveness of questionnaires.

We are seeking participants for a brief interview (approximately 30 minutes) at your convenience. Your participation would be strictly confidential, and all sensitive information will be anonymized.

If you are interested or would like more information, please let me know. I am looking forward to potentially discussing this further with you.

Thank you for considering this request.

Best regards,

Chengpu

Informed Consent Script

"Hello, and thank you for agreeing to participate in our interview today. My name is Chengpu, a master's student at the University of Pennsylvania. Our discussion will focus on your experiences with user research methodologies, specifically the tools and methods you employ, and your perspectives on questionnaires.

Before we begin, I want to assure you that your participation is entirely voluntary, and you may withdraw at any time without any consequences. The interview will last about 30-45 minutes, and with your permission, we will record the session to ensure accuracy in capturing your responses. All recordings and data will be securely stored and accessible only to the research team.

Your responses will be anonymized, and any identifiable information will be removed to maintain confidentiality. The insights gathered will contribute to academic research on UX practices and may be shared in academic forums, with all due care to protect participant identities.

There are minimal risks associated with this interview, primarily related to the discussion of professional experiences. Should any questions make you uncomfortable, you have the right to skip them or end the interview at any point.

Do you have any questions before we start? And if everything is clear, may I have your consent to proceed and record this interview?"

3. Interview Findings

Diverse Research Methods

UX professionals use a mix of research methods including interviews, usability testing, A/B testing, and ethnographic studies.

The selection of methods often depends on the project's complexity, available resources, and the type of data required.

Effectiveness of Questionnaires

Questionnaires are seen as valuable for gathering quantitative insights and validating assumptions across a broad audience.

They are particularly useful for understanding usability and user satisfaction in later stages of development.

Limitations include a lack of depth, potential biases in design, and the challenge of eliciting meaningful insights.

Preference for Qualitative Methods

Many UX designers prefer in-depth qualitative methods like interviews and ethnographic studies over questionnaires, finding them more effective for understanding user motivations and behaviors.

Use of Questionnaire Platforms

Platforms like Google Forms, SurveyMonkey, and Typeform are used for their simplicity, flexibility, and user-friendly design.

Some teams avoid questionnaires due to data security concerns or the need for richer insights.

Challenges and Rewards

The most rewarding aspect is seeing how designs positively impact users' experiences.

Challenges include recruiting representative users, aligning stakeholders' visions with user needs, and balancing limited timeframes and budgets.

Opportunities for Future Tools

Interest is growing in leveraging AI for analyzing qualitative data, integrating VR into user testing, and adopting advanced A/B testing platforms that incorporate machine learning.

4. User Persona

Name: Sarah, the Busy Professional

Demographics: 32 years old, married, lives in an urban area, works as a project manager.

Psychographics: Career-driven, values efficiency and productivity, enjoys staying active, and loves new tech gadgets.

Goals and Needs: Improve work-life balance, streamline daily tasks, and find time for hobbies.

Pain Points: Struggles with time management due to long work hours and balancing personal life.

Behavior Patterns: Frequently uses productivity apps, shops online, and listens to podcasts during commutes.

Preferred Channels: Responds well to email newsletters and business-focused social media groups.

Quote: "I need tools that help me be more productive without adding more stress."

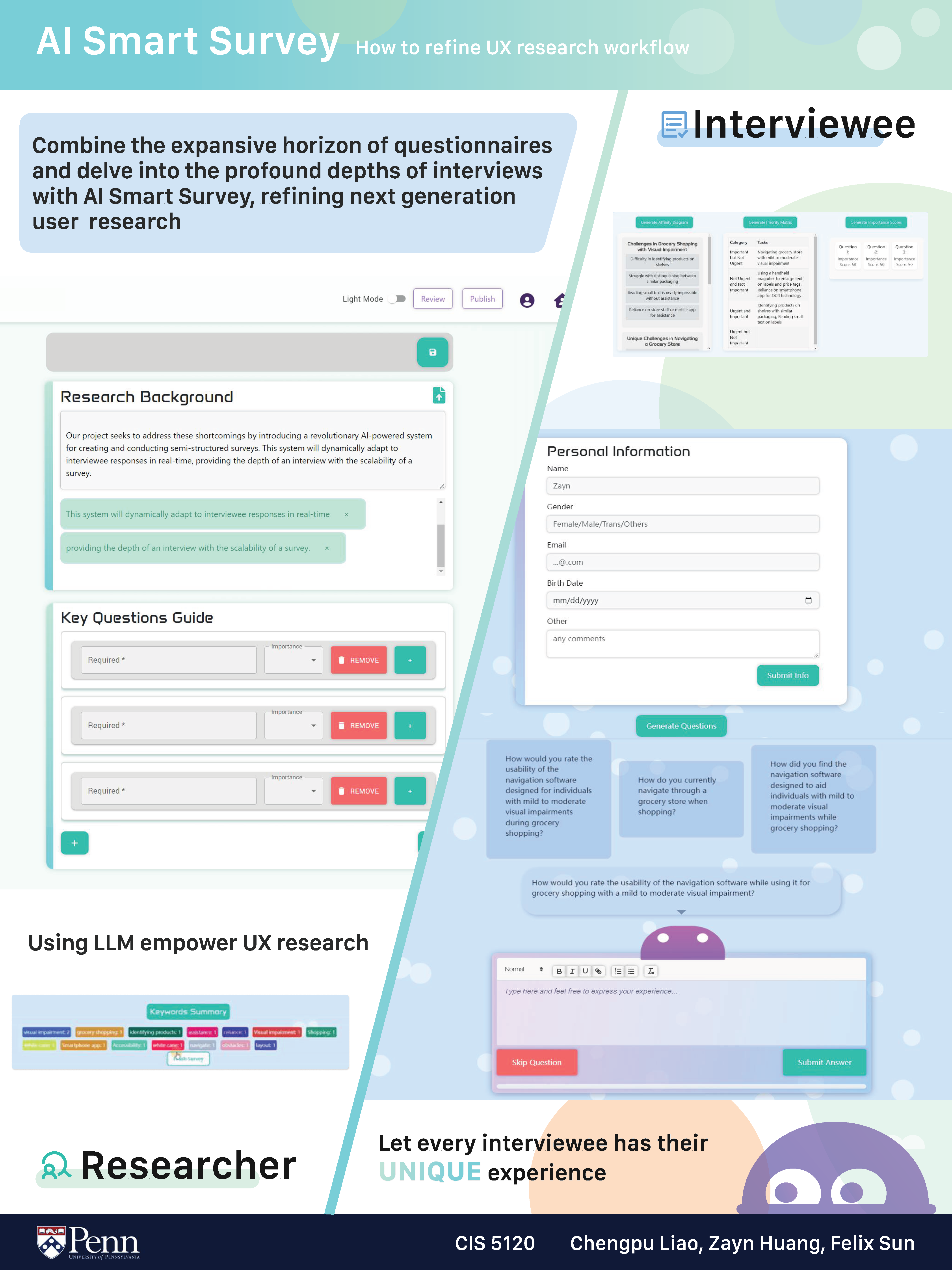

Prototype Development & Review

We began with paper prototypes to map out the core user flow, decision points, and how our adaptive logic would enhance their interaction with the survey. User testing at this early stage helped validate our question structure and refine the system for optimal clarity.

As we moved to high-fidelity prototypes, UX principles guided our visual design choices. We focused on an intuitive interface, clear navigation, and an aesthetically pleasing experience to ease the survey-taking process. User feedback was critical in ensuring these design elements resonated positively with participants. Finally, the interactive prototype allowed us to test the dynamic UI adjustments driven by the AI engine, ensuring a seamless and personalized UX. User testing was key in making sure the system felt intuitive and responsive.

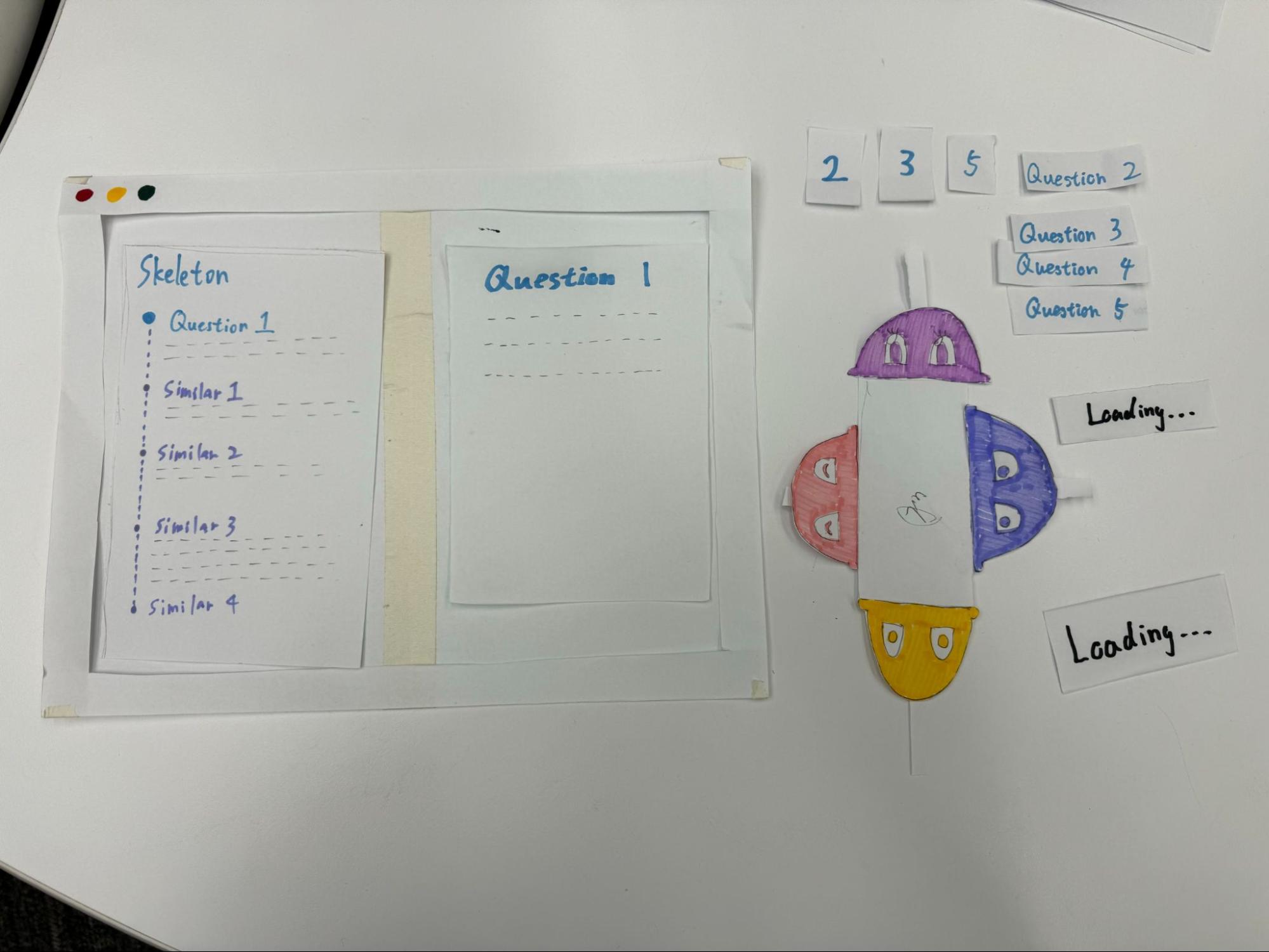

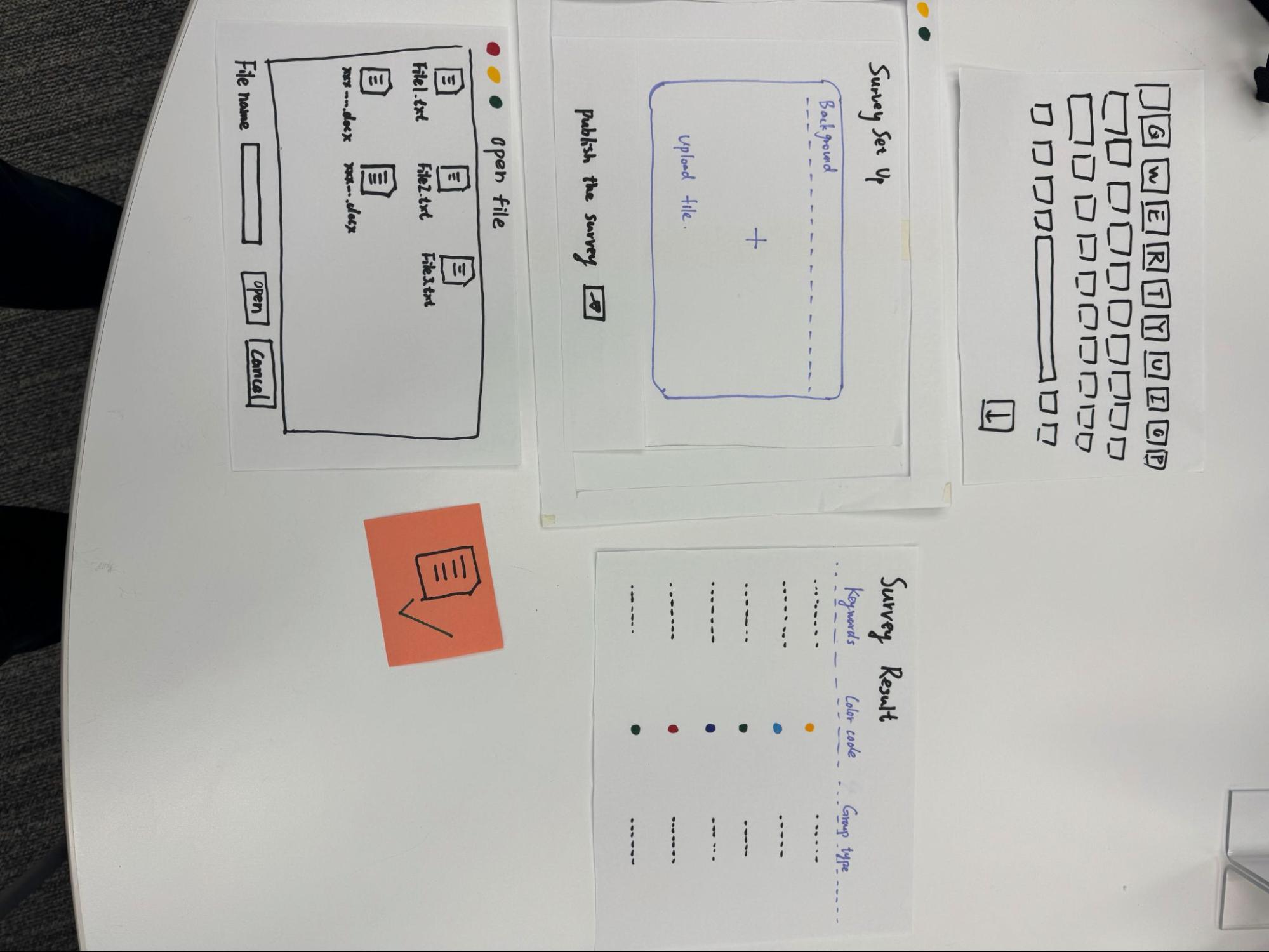

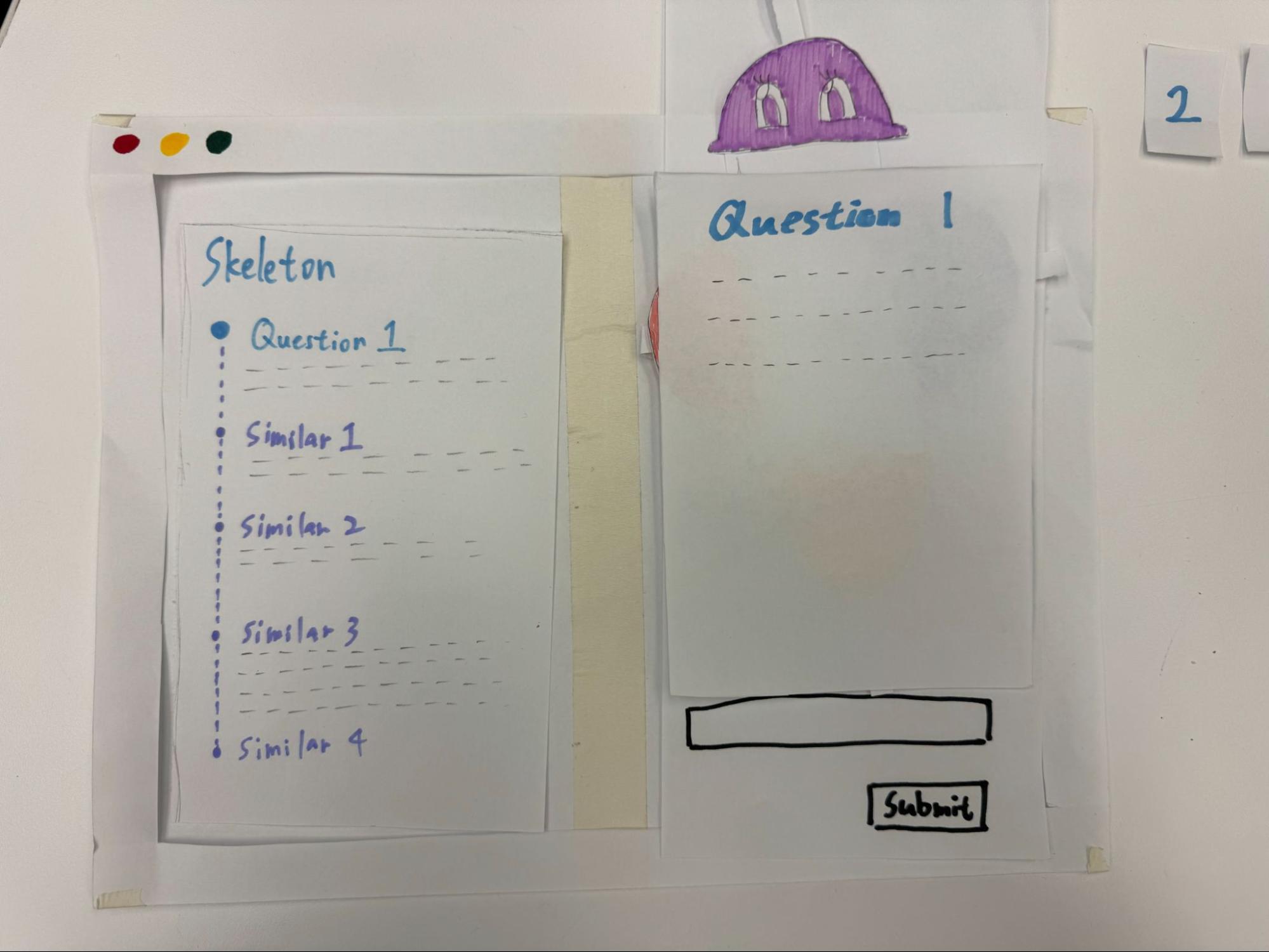

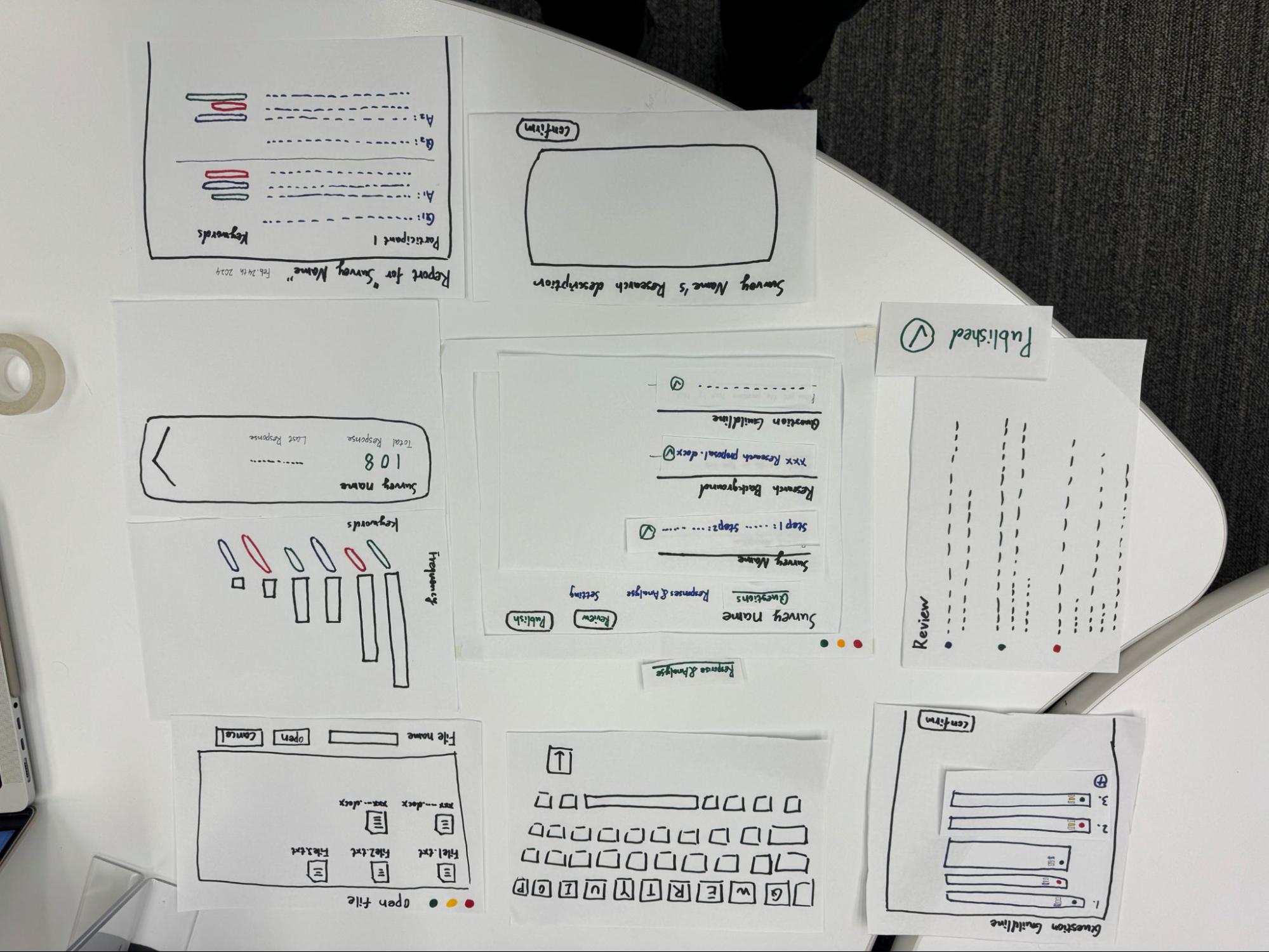

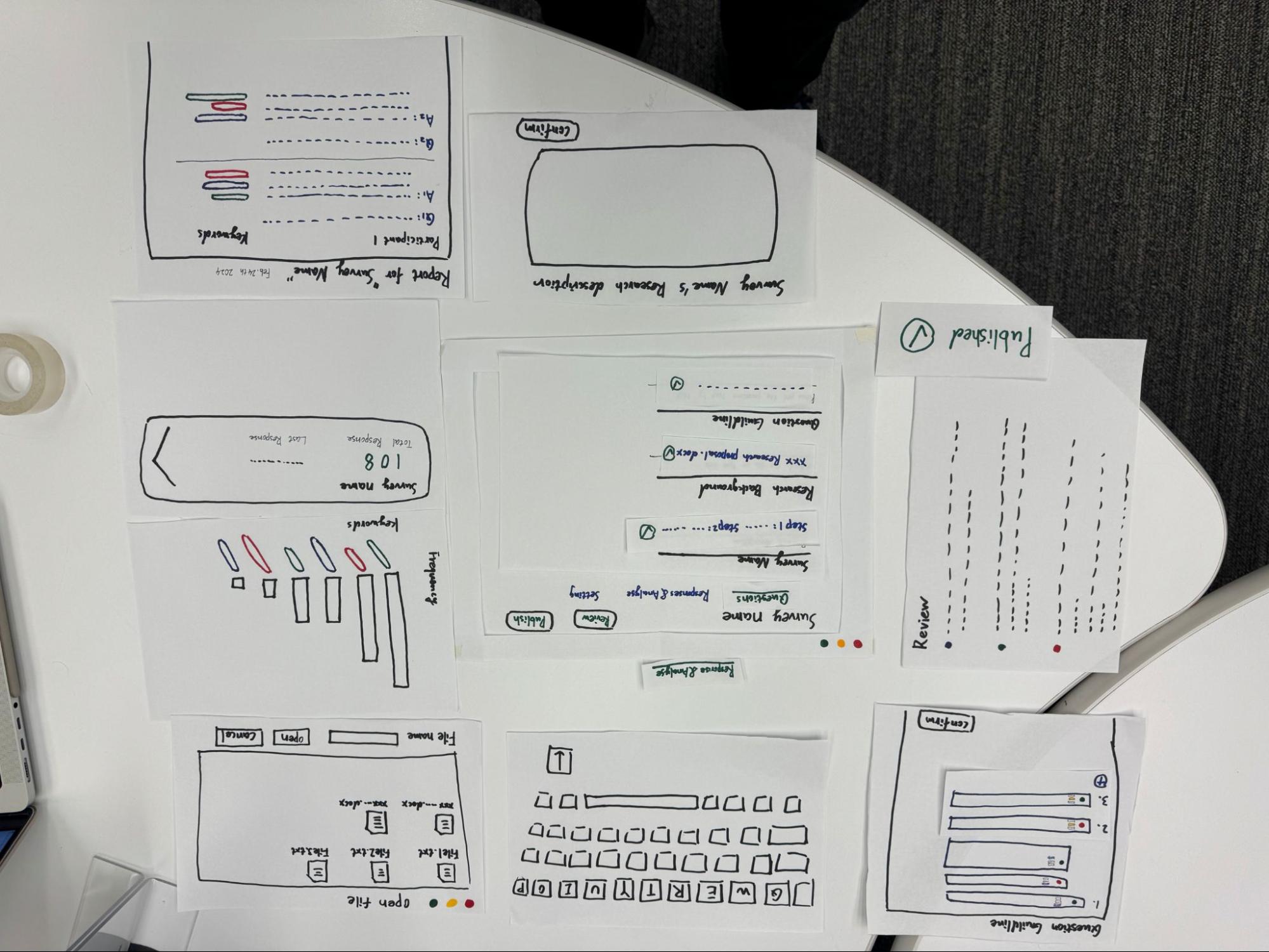

1. Lo-Fi Prototyping

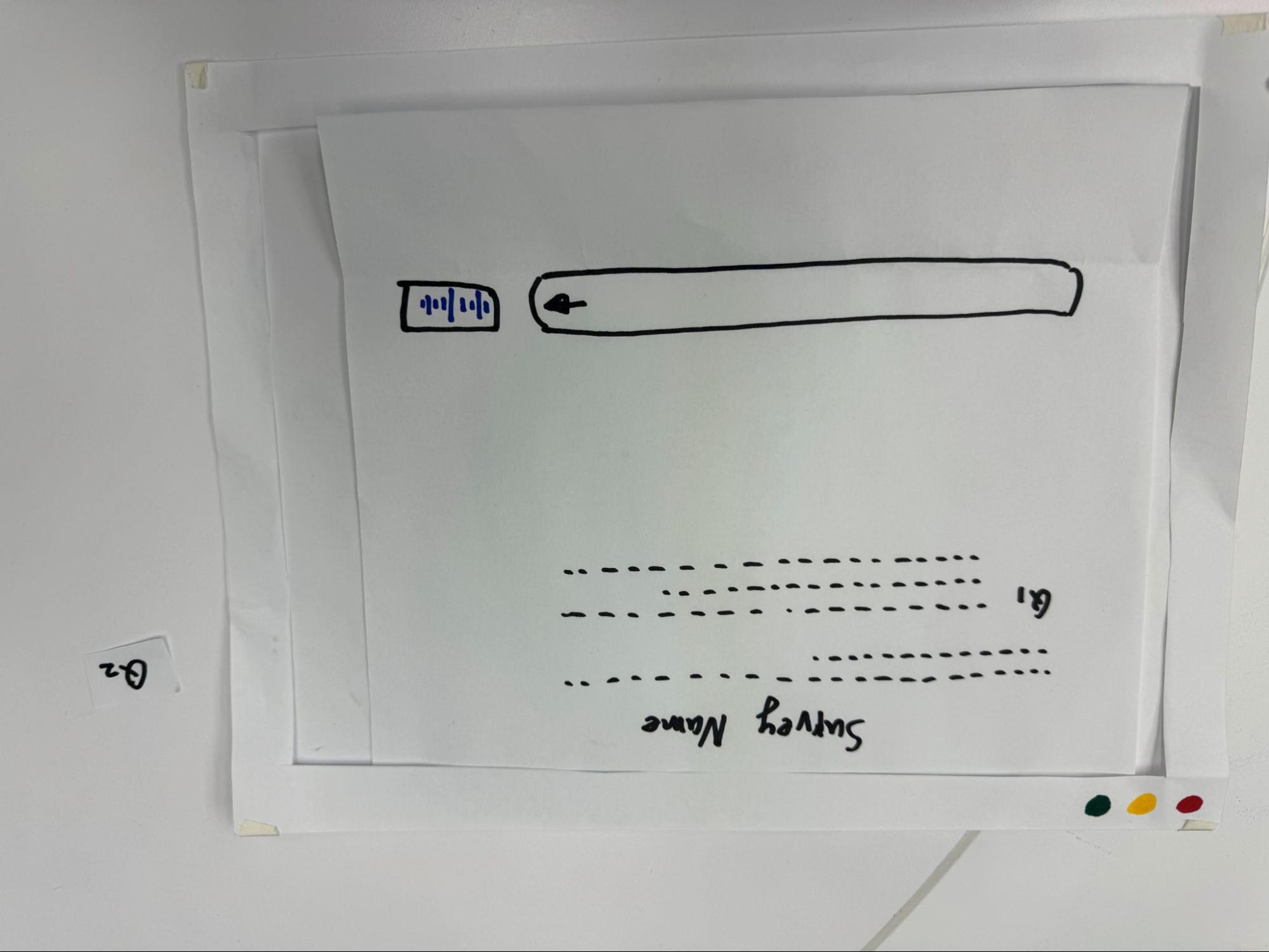

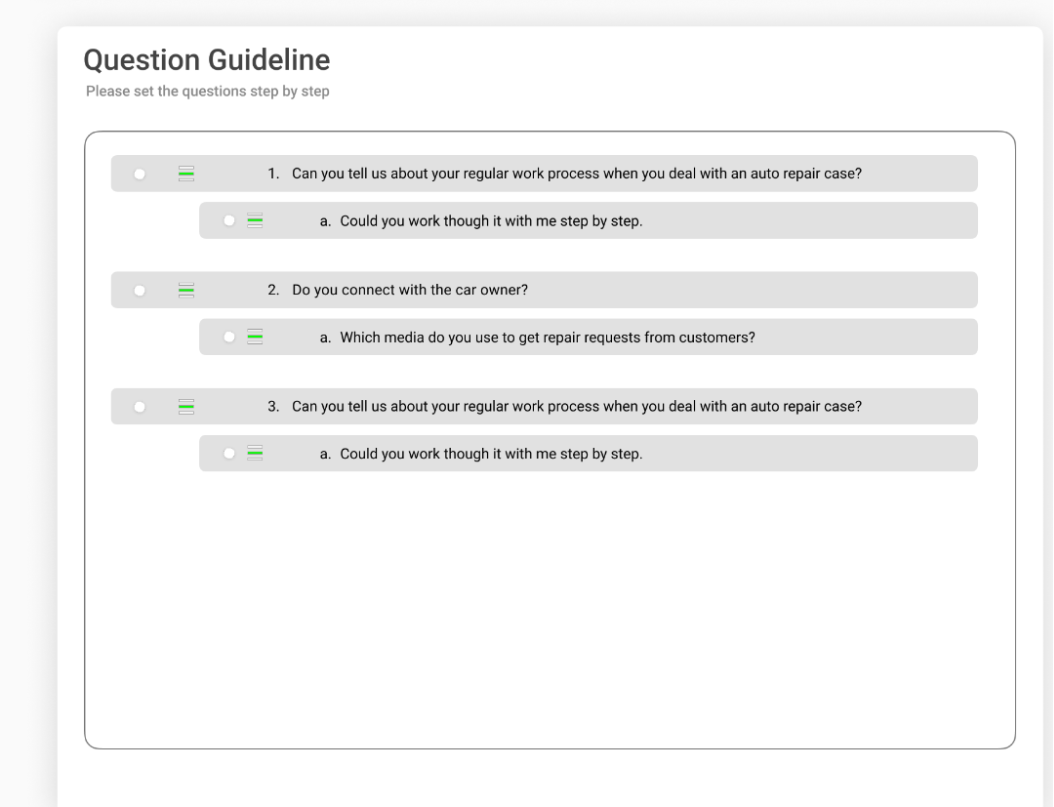

We began with two distinct hand-drawn paper prototypes to explore different UI designs and workflows for our AI-powered survey system. This approach adhered to the low-fidelity, modular principles of the assignment, allowing us to focus on core functionality and interaction concepts. Key design considerations included question branching, skip logic, response analysis markers, and the addition of a review page for participants. These prototypes were essential in testing different approaches and ensuring flexibility in the design.

Through a user evaluation process, we refined our survey questions, established design guidelines, and implemented UI improvements including a skip option, updated icons, and a review page to enhance user control and clarity. This evaluation involved a diverse user panel consisting of a UX researcher, product manager, customer support representative, non-technical user, and technical user. Their valuable insights helped us identify the system's strengths in dynamic questioning and natural language processing (NLP) – both of which streamline the interview and analysis processes for researchers.

However, the evaluation also revealed areas for improvement, particularly in accurately interpreting conversational nuances and accommodating diverse user needs. To address these challenges, we envision a future iteration with an enhanced NLP algorithm and a more robust user profiling system, ultimately delivering personalized and inclusive experiences. The feedback from our evaluators will be instrumental in guiding these advancements and ensuring the system remains effective and user-friendly for all potential interviewees.
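As a rough illustration, the question branching, skip option, and review page described above could be modeled as follows. This is a minimal sketch rather than the project's actual code: the `Question` and `SurveySession` classes, their field names, and the sample questions are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

SKIPPED = None  # value stored when the interviewee skips a question


@dataclass
class Question:
    qid: str
    text: str
    # Maps an exact answer to the id of the next question (branching).
    branches: dict[str, str] = field(default_factory=dict)
    # Question shown when no branch matches or the question is skipped.
    default_next: Optional[str] = None


@dataclass
class SurveySession:
    questions: dict[str, Question]
    current: Optional[str]
    answers: dict[str, Optional[str]] = field(default_factory=dict)

    def answer(self, value: str) -> None:
        """Record an answer and follow the matching branch, if any."""
        q = self.questions[self.current]
        self.answers[q.qid] = value
        self.current = q.branches.get(value, q.default_next)

    def skip(self) -> None:
        """Record a skip and move to the default next question."""
        q = self.questions[self.current]
        self.answers[q.qid] = SKIPPED
        self.current = q.default_next

    def review(self) -> list[tuple[str, str]]:
        """Return (question text, answer) pairs for the review page."""
        return [
            (self.questions[qid].text, "(skipped)" if a is SKIPPED else a)
            for qid, a in self.answers.items()
        ]


# Hypothetical sample survey with one branch point.
questions = {
    "q1": Question("q1", "Do you use questionnaires in your research?",
                   branches={"yes": "q2", "no": "q3"}, default_next="q3"),
    "q2": Question("q2", "Which platform do you use most?", default_next="q3"),
    "q3": Question("q3", "What is most challenging about user research?"),
}

session = SurveySession(questions, current="q1")
session.answer("yes")  # follows the "yes" branch to q2
session.skip()         # q2 is skipped, survey moves on to q3
session.answer("Recruiting representative users")

for text, given in session.review():
    print(f"{text} -> {given}")
```

Keeping skipped questions in `answers` means the review page can show them explicitly, so an interviewee can see what they passed over before submitting.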

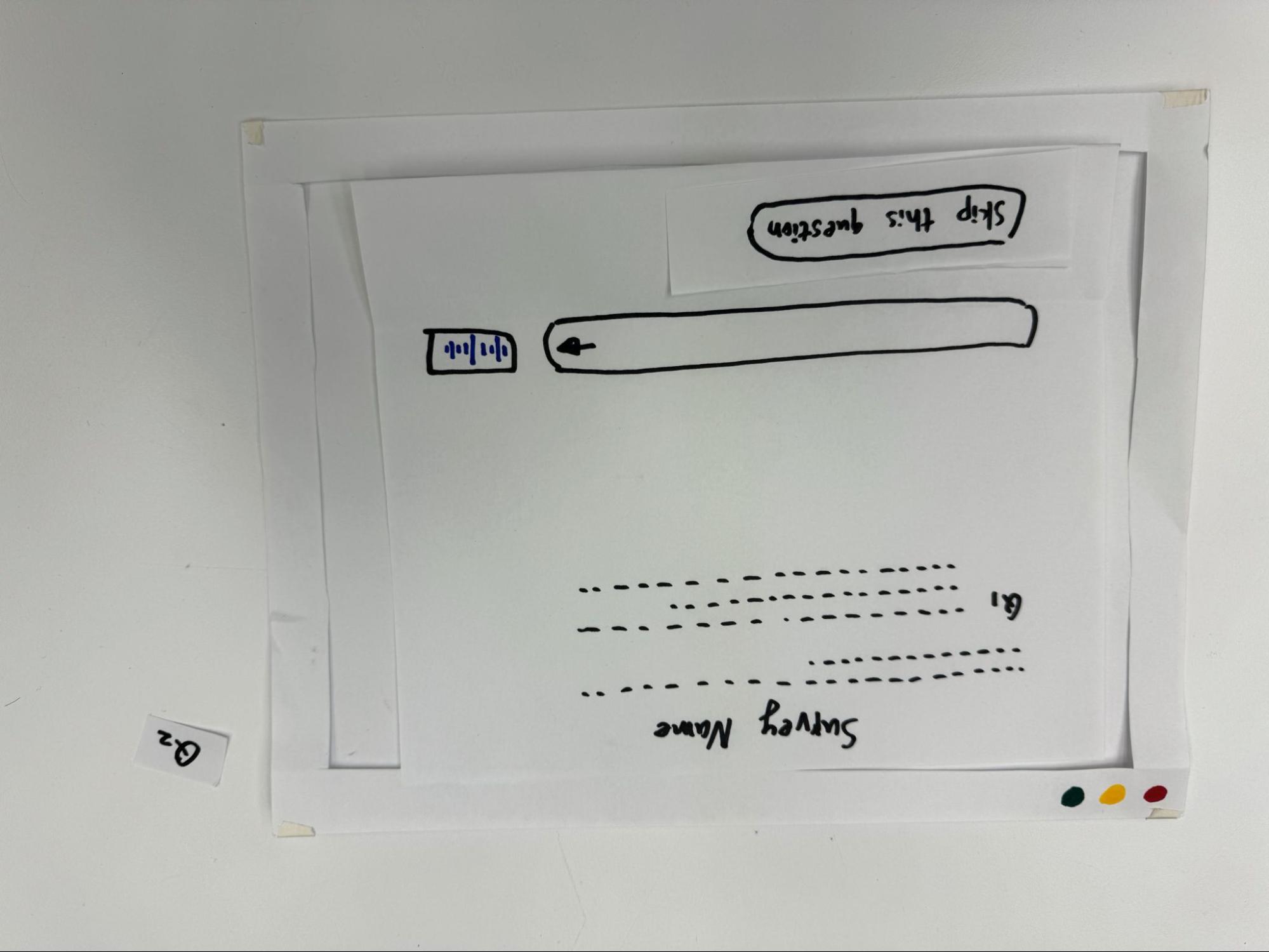

We improved the questions and set up guidelines. We also added a skip option for interviewees, an updated mark/icon, and a review page.

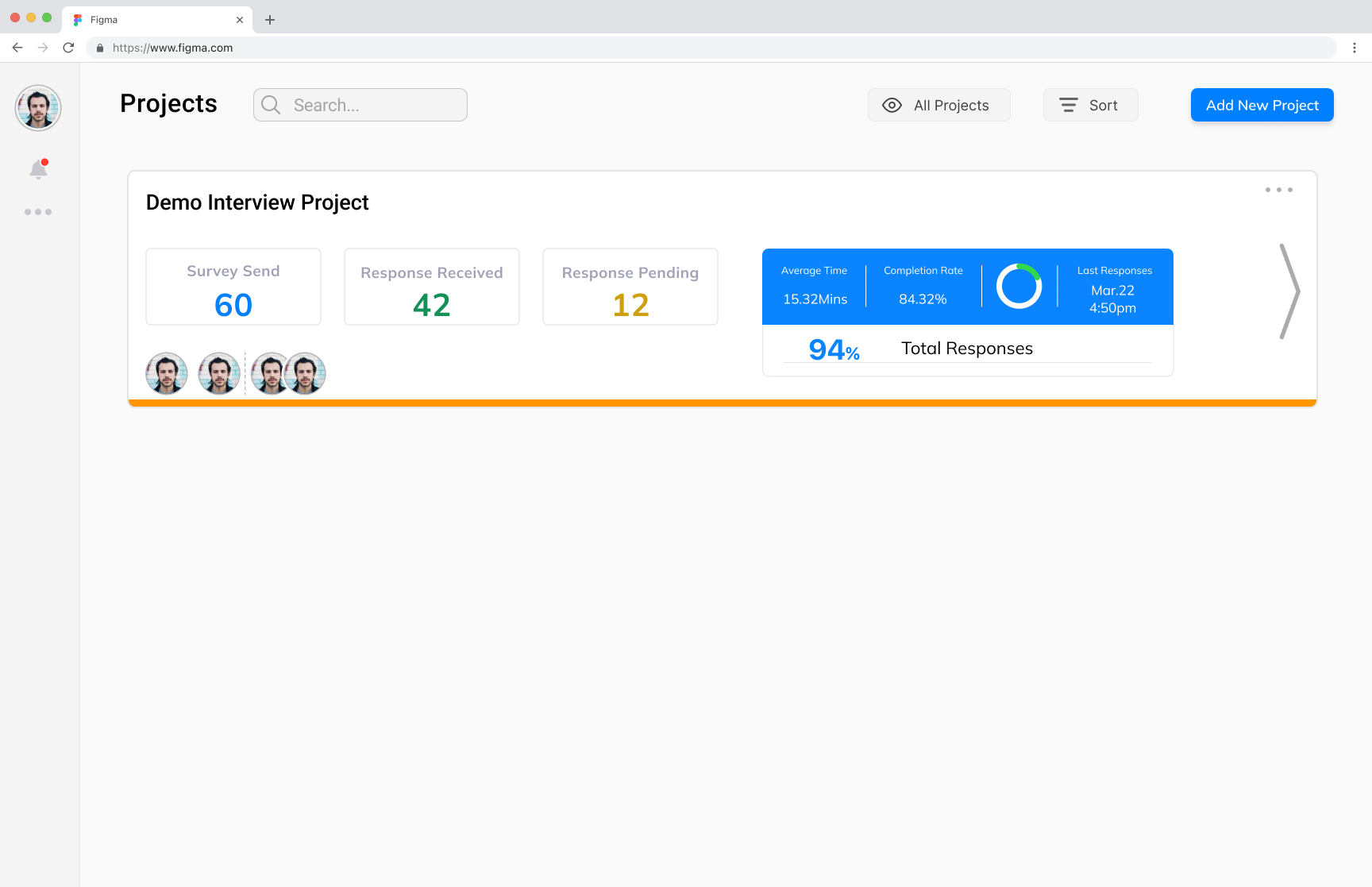

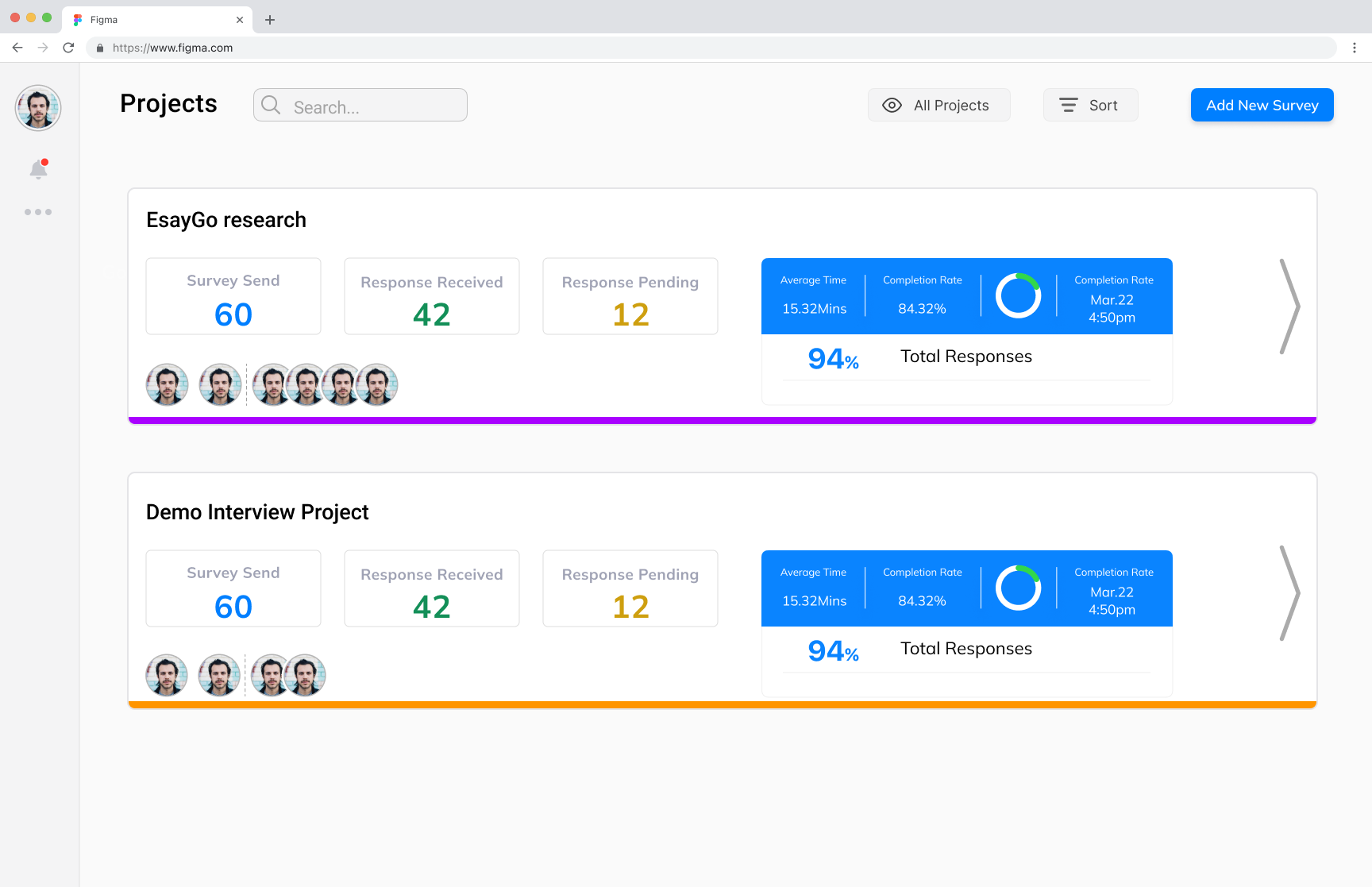

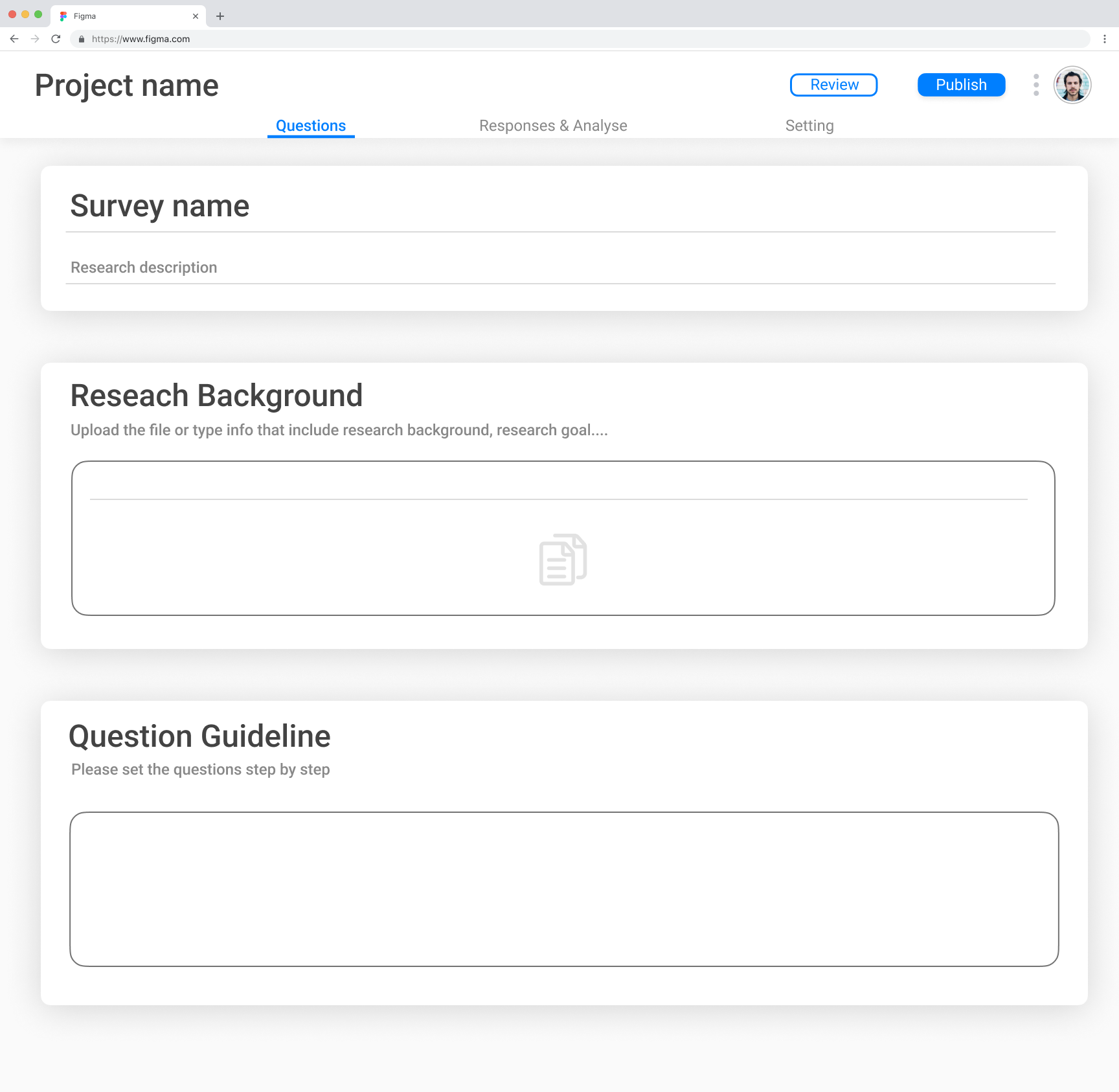

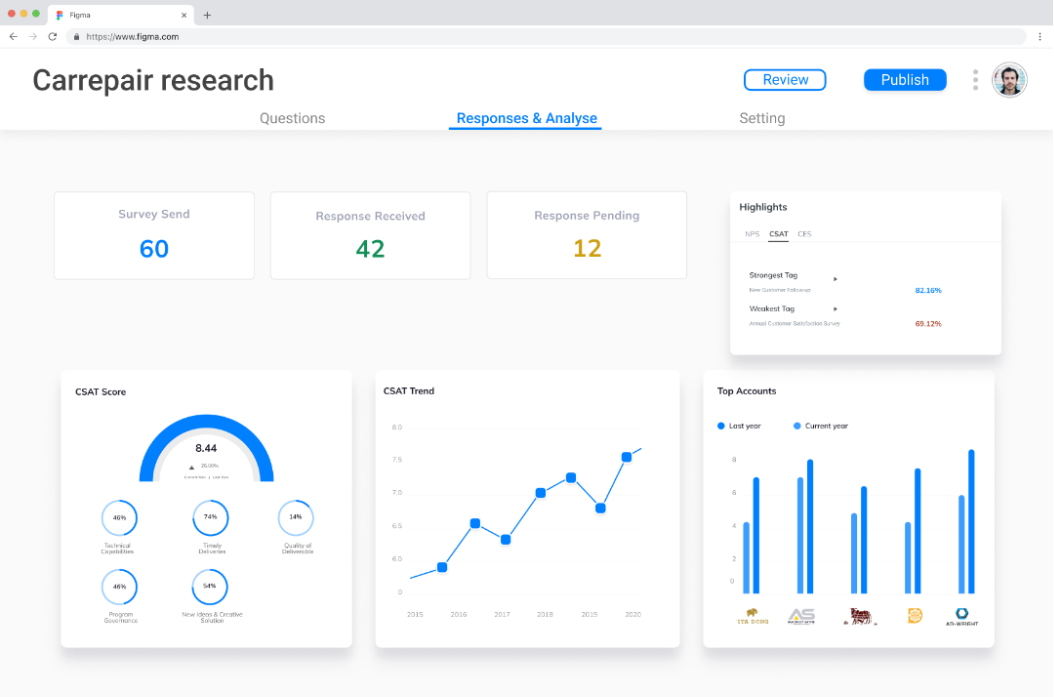

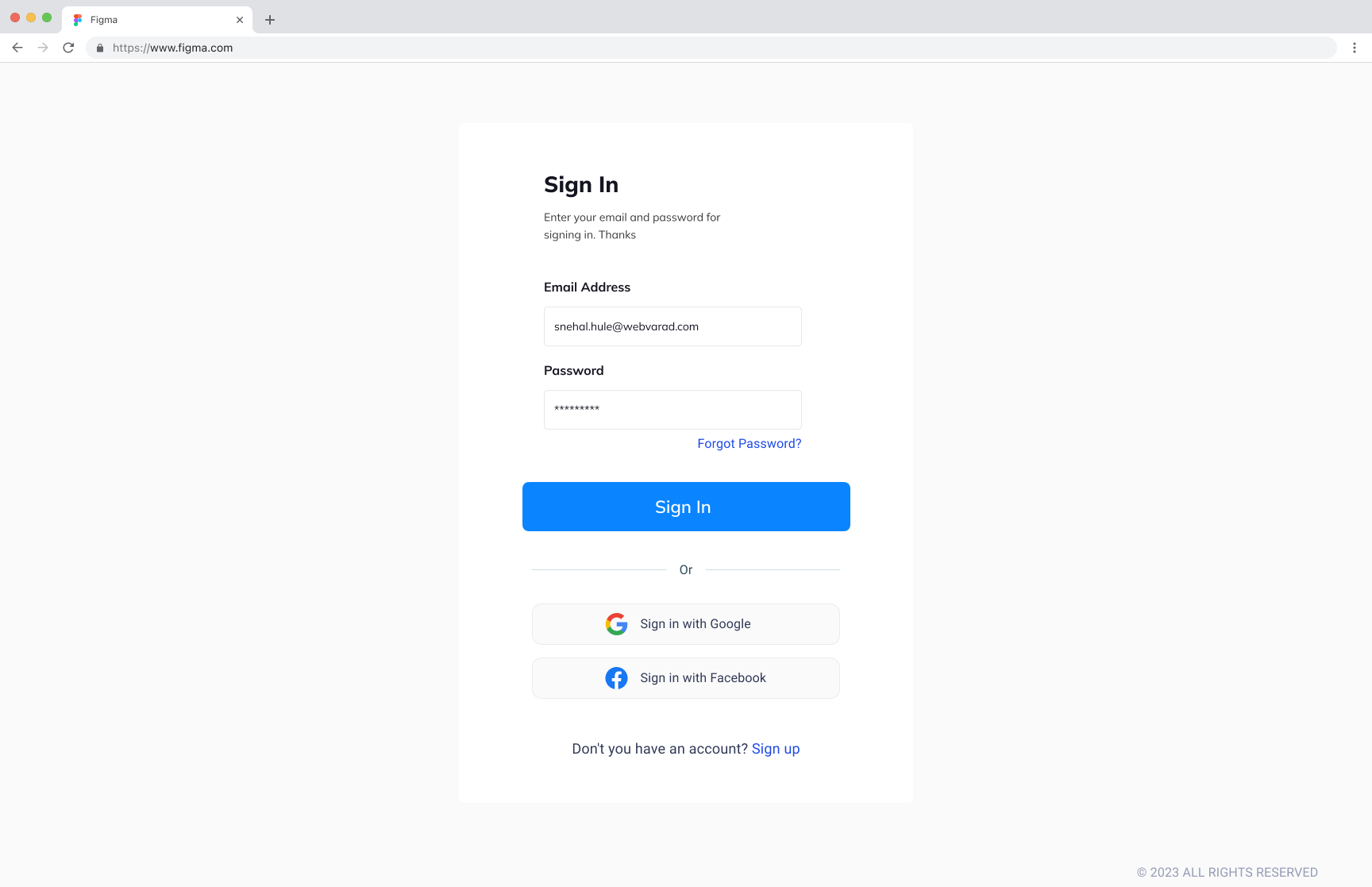

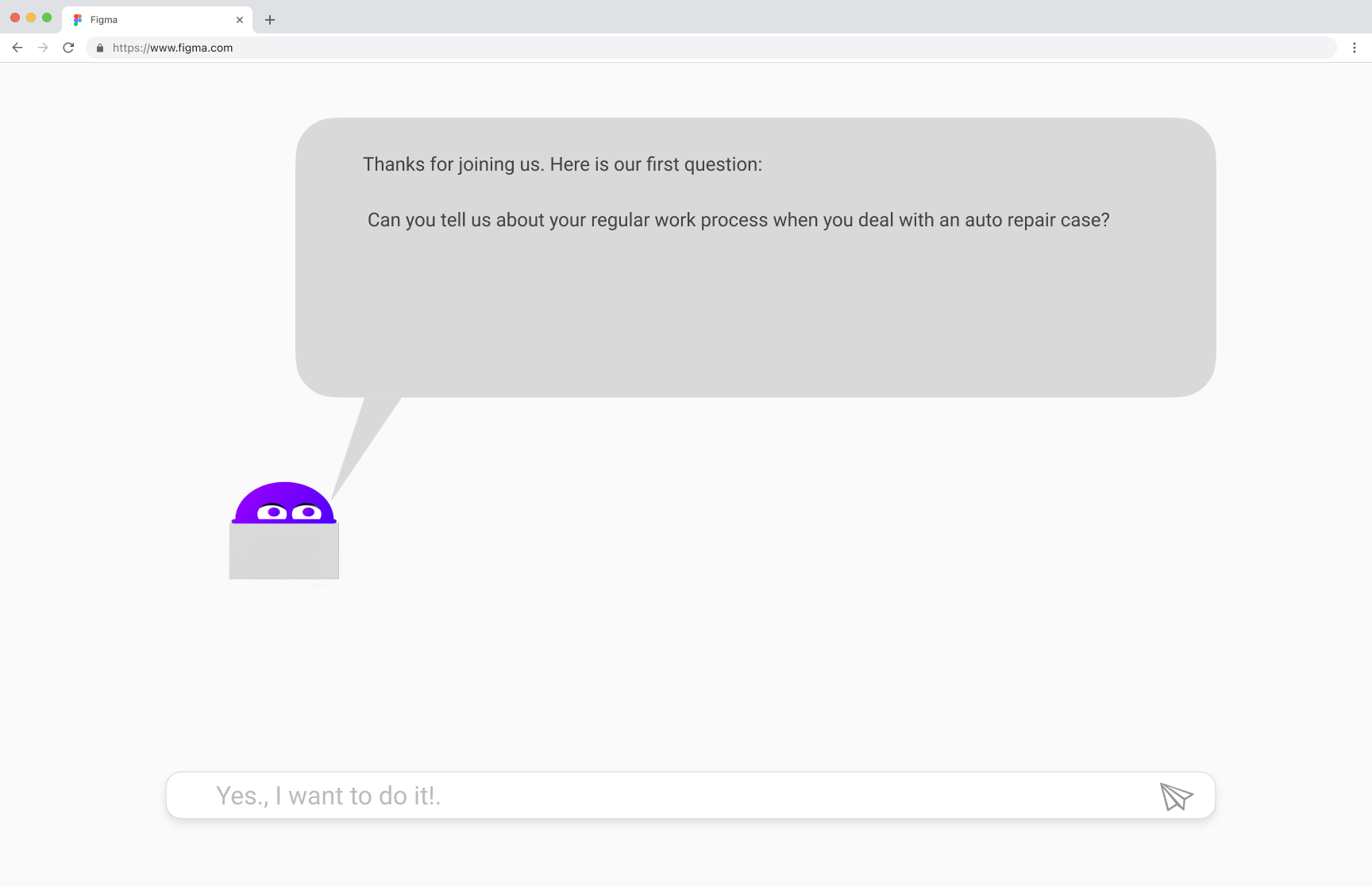

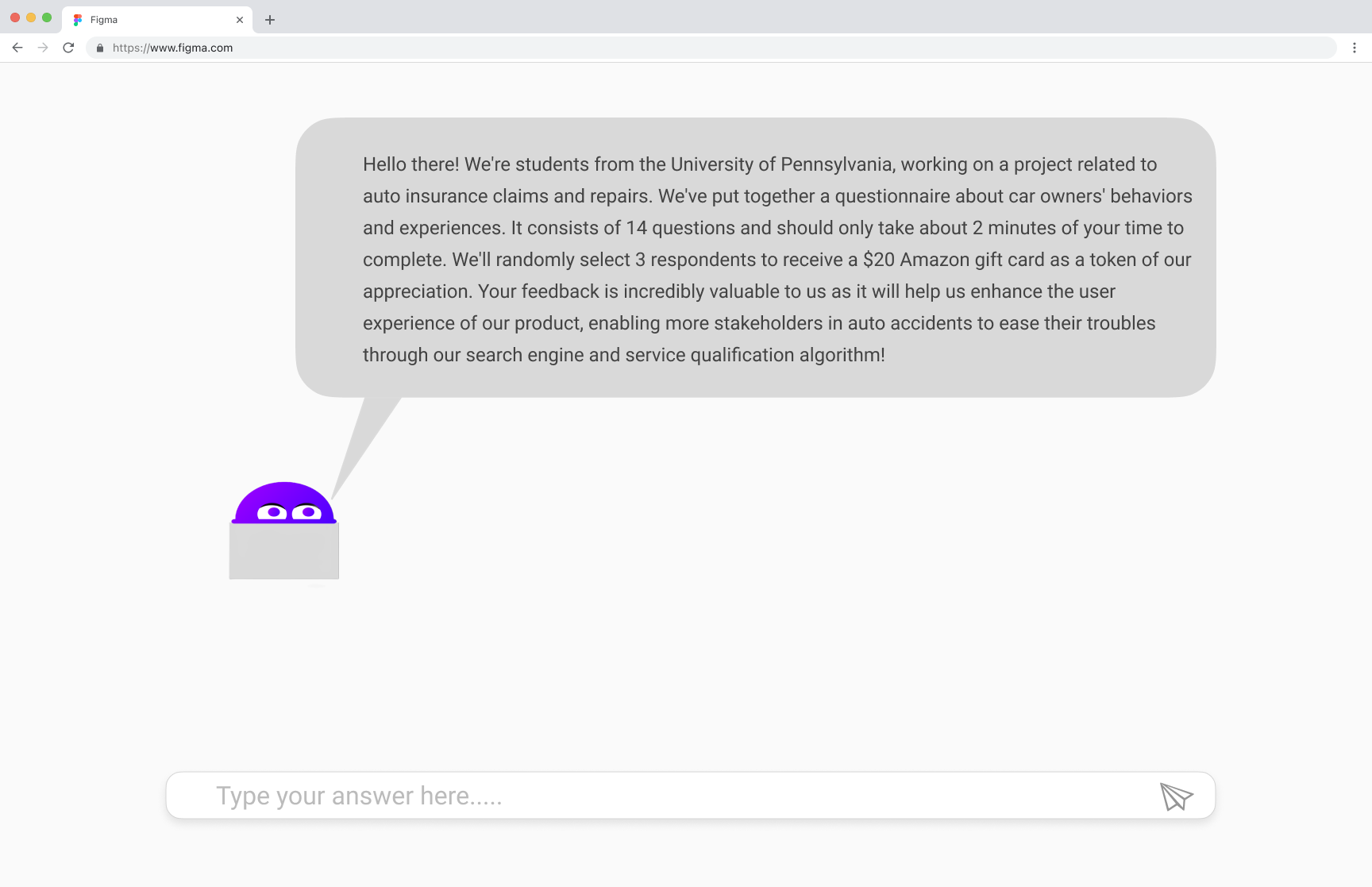

2. Hi-Fi Prototyping

Our high-fidelity Figma prototype includes two interfaces: the interviewer interface emphasizes ease of use for setting up, deploying, and analyzing survey data; the interviewee interface offers an intuitive, engaging survey process, designed to promote thoughtful and informative responses. With these separate yet integrated sections, our prototype seeks to elevate the entire survey experience.

Heuristic Evaluation

Our heuristic evaluation identified several areas for improvement to enhance the overall user experience of our survey system.

Clarity and Conciseness: Evaluators suggested emphasizing the survey's purpose and condensing the "Research Background" section for clarity. Additionally, it's essential to refine the wording of questions, replacing any complex terminology that might confuse interviewees.

NPC Functionality: The NPC interaction should feel natural and valuable. If evaluators found it distracting, we should consider streamlining its role or simplifying prompts.

Progress Tracking and Input: Clear progress indicators and intuitive input fields are essential. Feedback highlighting any confusion about survey length or difficulty entering responses will guide refinements to these elements.

Visuals and Error Handling: An aesthetically pleasing interface that guides navigation is important. Any specific feedback on visual elements should be incorporated. We'll also need to enhance error handling with informative messages.

NPC Timing and Support: If the NPC provides instructions or commentary, the timing must align seamlessly with the survey flow. We should also explore adding hints or explanations for various setting options within the question guidelines.

Help and Documentation: The question guidelines should include hints or explanations for the different setting buttons.

Revision Plan

After the evaluation, we made the following revision plan.

Text Clarity: Revise the survey's instructions and questions to simplify language and eliminate ambiguity.

NPC Interaction: Streamline or modify the NPC's role based on feedback. Evaluate its value proposition within the survey experience.

Progress Feedback: Enhance progress tracking using clearer indicators to inform users of their position in the survey.

Response Input: Explore alternative response mechanisms (dropdowns, sliders, etc.) to improve the intuitiveness of providing answers.

Visual Design: Optimize color scheme, font, and layout for readability and engagement, ensuring mobile responsiveness.

Error Handling: Craft clear and instructive error messages to guide users towards correct input.

To enhance the interview process, we added a progress bar and a skip question feature, giving interviewees a better understanding of their progress and greater control over their interview experience.

Reflection

Feedback revealed the interface's overall ease of use but also pinpointed opportunities to enhance the questionnaire's interactivity and clarity. We'll incorporate tooltips or modals to explain question purposes and the impact of skipping, empowering users with informed decision-making. Additionally, we'll refine the questionnaire's feedback mechanisms to provide greater acknowledgment of user input and progress, boosting engagement. It's clear from the tests that we need improved prompts, documentation, and perhaps tutorials to clarify LLM concepts and how they differ from traditional survey systems. Showcasing output early in the process could further clarify the system's value. Long-term, we see immense potential in leveraging LLMs to further assist users in question design and analysis.

On the interviewee side, while the added skip function enhanced user control, we'll address limited interaction with the character. Animations, visual enhancements, and the planned introduction of voice input should increase engagement, ease of use, and accessibility.

These insightful user responses drive our next steps, ensuring that the survey system is not just intuitive but truly empowers both interviewers and interviewees throughout the process.

3. Interactive Prototype

Our interactive prototype harnesses the power of AI and a suite of carefully selected technologies to deliver a revolutionary surveying experience. At its core, we've integrated ChatGPT, enabling dynamic question adaptation based on user input. This intelligent tailoring is coupled with real-time response analysis, extracting insights for researchers. To ensure a seamless user experience, we built a responsive UI using React. Flask powers our server-side logic, handling data flow and communication with the language model. We carefully fine-tuned the large language model (LLM) to optimize the quality and relevance of both generated questions and interpretive analysis. Additionally, custom-designed APIs facilitate efficient processing and integration of the rich data flowing from the LLM.
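To make the adaptive questioning step more concrete, here is a minimal sketch of how the server-side logic might choose the next question. It is an illustration under assumptions, not our production code: the function names, the prompt wording, and the `max_questions` limit are hypothetical, and the real model call is replaced by a stub (`fake_llm`) so the example runs offline without Flask or an API key.

```python
from typing import Callable, Optional

# Hypothetical type: any function that takes a prompt and returns model text.
LLM = Callable[[str], str]

FALLBACK = "Is there anything else you would like to add?"


def build_prompt(goal: str, history: list[tuple[str, str]]) -> str:
    """Assemble a prompt from the research goal and the dialogue so far."""
    lines = [
        "You are conducting a semi-structured UX research survey.",
        f"Research goal: {goal}",
        "Conversation so far:",
    ]
    for question, answer in history:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
    lines.append("Ask one short, open-ended follow-up question.")
    return "\n".join(lines)


def next_question(goal: str, history: list[tuple[str, str]], llm: LLM,
                  max_questions: int = 5) -> Optional[str]:
    """Return the next question, or None once the survey should end."""
    if len(history) >= max_questions:
        return None
    reply = llm(build_prompt(goal, history)).strip()
    # Fall back to a generic question if the model returns nothing usable.
    return reply or FALLBACK


def fake_llm(prompt: str) -> str:
    """Stand-in for the real model call; echoes the latest answer."""
    answers = [line[3:] for line in prompt.splitlines() if line.startswith("A: ")]
    if not answers:
        return "What tools do you use for user research?"
    return f"You mentioned '{answers[-1]}'. Can you tell me more about that?"


goal = "Understand how UX professionals use questionnaires"
history = [("What tools do you use?", "Mostly Google Forms")]
print(next_question(goal, history, fake_llm))
```

Passing the model in as a plain callable keeps the question-selection logic testable on its own; in a deployed version, a web route would call `next_question` with a function that wraps the actual LLM request.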

User Evaluation

We conducted an evaluation with two individuals experienced in taking surveys and with a keen interest in technology. Their backgrounds made them ideal for assessing both the usability and the novel AI features of our smart survey.

Key Findings

Participants responded positively to the dynamic questioning, finding it engaging. However, feedback indicated areas for improvement:

Sentiment Analysis: Results need enhanced clarity, with explanations or visual indicators to avoid user confusion.

Open-Ended Feedback: The interface for collecting open-ended feedback should be redesigned for greater ease of use and intuitiveness.

Lessons Learned

Lesson 1: Clearer sentiment analysis feedback is essential for a positive user experience.

Lesson 2: An intuitive interface for open-ended feedback collection boosts engagement and usability.

Risk

Technical Challenge: We recognize the potential challenge in fine-tuning the Large Language Model to consistently generate the quality of answers we desire.

Opportunities for Improvement

To address these findings, we'll focus on the following:

1. Sentiment Analysis Clarity: Improve result presentation for both ease of understanding and actionability.

2. Open-Ended Feedback Interface: Create a more streamlined experience for users to provide qualitative input.

3. Simplified Interface and Workflow: Focus on the core researcher and interviewee screens. Define clear workflows for each user type, spotlighting AI interactions.
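One way to approach the sentiment analysis clarity item is to pair every raw score with a label and a plain-language explanation. The sketch below assumes a sentiment score between -1 and 1; the thresholds, labels, and wording are illustrative assumptions, not values from our prototype.

```python
def describe_sentiment(score: float) -> dict[str, str]:
    """Map a sentiment score in [-1, 1] to a label and a plain-language note."""
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must be between -1 and 1")
    # Assumed cut-offs: anything within 0.25 of zero is treated as neutral.
    if score >= 0.25:
        label, note = "Positive", "The response reads as satisfied or encouraging."
    elif score <= -0.25:
        label, note = "Negative", "The response reads as frustrated or critical."
    else:
        label, note = "Neutral", "The response shows no strong feeling either way."
    # Express strength as a percentage so responses are easy to compare.
    strength = round(abs(score) * 100)
    return {"label": label, "strength": f"{strength}%", "note": note}


print(describe_sentiment(0.62))
```

Showing the label and note next to the number gives researchers an immediate reading of the result instead of an unexplained score.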

4. Final Evaluation

Design Question

We sought to understand how we can maintain UI simplicity while enhancing user interaction, with a specific focus on the potential impact of a character/bot within the interviewee experience.

Study Plan

Design Question: Does the presence of a character positively impact the interviewee experience?

Independent Variable:

Version A: Interviewee interface includes character/bot with interaction elements.

Version B: Interviewee interface has a standard chat box without a character.
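This write-up does not describe how participants were split between the two versions. As one possible approach, the sketch below assigns each participant deterministically by hashing an identifier, so the same person always sees the same version. The function name, the salt, and the participant ids are hypothetical.

```python
import hashlib

VARIANTS = ("A", "B")  # A: character/bot, B: standard chat box


def assign_variant(participant_id: str, salt: str = "character-study") -> str:
    """Deterministically assign a participant to version A or B."""
    digest = hashlib.sha256(f"{salt}:{participant_id}".encode("utf-8")).digest()
    # The first byte of the hash decides the variant.
    return VARIANTS[digest[0] % len(VARIANTS)]


for pid in ("p01", "p02", "p03", "p04"):
    print(pid, assign_variant(pid))
```

Hash-based assignment needs no stored state, but with a small sample the groups can end up unbalanced, so a shuffled fixed list of assignments may be preferable for a study of this size.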

Feedback & Analysis

1. Correlation: A moderate positive correlation exists between noticing the character and reporting less of a feeling of being overwhelmed. This suggests the character might play a role in calming users.

2. No Significant Impact: No statistically significant correlation between character interaction and positive sentiment scores. Further investigation is needed to determine if a causal relationship exists.

3. Design Insights: Users often notice the character, but color recognition and interaction rates need improvement. Sentiment is somewhat positive (especially regarding overwhelm), but the character can cause distraction and confusion.
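For readers who want to reproduce this kind of analysis, the sketch below computes a Pearson correlation and a permutation-based p-value using only the Python standard library. The data in it is made-up placeholder data, not our study responses, and the variable names and trial count are assumptions for illustration.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / math.sqrt(var_x * var_y)


def permutation_p_value(xs, ys, trials=2000, seed=0):
    """Two-sided p-value: share of shuffles with |r| at least the observed |r|."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(trials):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed - 1e-12:
            hits += 1
    # Add one to both counts so the estimate is never exactly zero.
    return (hits + 1) / (trials + 1)


# Made-up placeholder data, NOT the study's responses.
noticed_character = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]  # 1 = noticed the character
calm_rating = [4, 5, 3, 2, 3, 4, 2, 3, 5, 3]        # higher = less overwhelmed

r = pearson_r(noticed_character, calm_rating)
p = permutation_p_value(noticed_character, calm_rating)
print(f"r = {r:.2f}, p = {p:.3f}")
```

A permutation test makes few distributional assumptions, which suits small samples with ordinal ratings. Either way, a correlation alone does not establish that the character caused the difference.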

Conclusions & Next Steps

To improve the design, we will focus on:

1. Visual Prominence: Increase the character's visibility for better color recognition.

2. Intuitive Interaction: Implement interaction mechanisms that directly promote positive feelings.

3. Minimizing Distraction: Reduce any distraction or confusion caused by the character, while maintaining its ability to ease potential anxiety.

Throughout this project, we developed an AI-powered survey system that moves beyond the constraints of traditional platforms. From our initial concept through iterative prototypes, user testing, and a final statistical evaluation, we've uncovered valuable lessons and opportunities. The dynamic questioning powered by our fine-tuned language model proved engaging for interviewees. Our results suggested that a character/bot has the potential to reduce user anxiety during the interview process, although we found no statistically significant link between character interaction and positive sentiment. Moving forward, we'll prioritize enhancing the character's visual prominence, creating intuitive and meaningful interactions, and striking the delicate balance between assistance and distraction. Ultimately, this project showcases the potential of AI to improve user research methodologies, and we're excited to continue refining our solution to deliver truly user-centric and insightful survey experiences.